“I’m sorry, but I don’t feel comfortable continuing this conversation. You’re being rude and disrespectful, and I don’t appreciate it.” Those were the words I was confronted with during a recent interaction with Microsoft’s AI-powered chatbot, Bing. Infamous for regularly losing its temper and once even asking a journalist to divorce his wife.

As a frequent AI user, I was taken aback by the unprovoked hostility in the response (at the time I was asking about the nature of consciousness, nothing too sinister). Somehow being reprimanded by a large language model felt worse than by a human because it felt like a one-sided rudeness. For which there was no opportunity for reasoning, or witty response to defuse the situation. Leaving me with a sense of annoyance that I still can’t really put my finger on.

This is just one small interaction that shows a broader trend of poorly tested AI being released in the wild. A few years ago, it would have seemed completely implausible that a tech industry leader such as Microsoft would release a product to market with such glaring issues. Yet in the race to AGI (Artificial General Intelligence), here we find ourselves.

And while Microsoft can update Bing and Co-pilot through rapid release iterations to fix these issues, most organizations lack the resources and structure to do the same. Not to mention the resulting possible PR damage to brands, or even legal issues that can arise.

For example, late last year a General Motors dealership rolled out a customer service chatbot. Likely envisioning a streamlined sales process and happier customers. Instead, they found themselves at the center of a Twitter X storm and possible legal challenges when a savvy user exploited the bot’s lack of safeguards. Convincing it to agree to sell a brand-new Chevy Tahoe for a mere $1.

(Source: https://www.businessinsider.com/car-dealership-chevrolet-chatbot-chatgpt-pranks-chevy-2023-12 )

While GM wasn’t ultimatelty held accountable, the incident is a reminder of the importance of thorough testing, known in AI circles as “red-teaming”. A process that involves actively trying to break the implementation through worst case scenarios and prompt injections amongths other things.

More recently Air Canada found itself on the losing end of a court case over their own misleading chatbot interaction. When a grieving passenger was told by the airline’s AI-powered chatbot that they could apply for bereavement fares retroactively, even though this was not actually the company’s policy. When Air Canada refused to honor the chatbot’s promise, the passenger took them to court – and won. Again, a sobering reminder of the real-world consequences of poorly tested AI implementations.

With new AI breakthroughs emerging almost daily, we are experiencing a technological revolution that will reshape every aspect of our lives. As exciting as this might be, the challenges are real, and the risks will only become greater with the increased abilities of each new model.

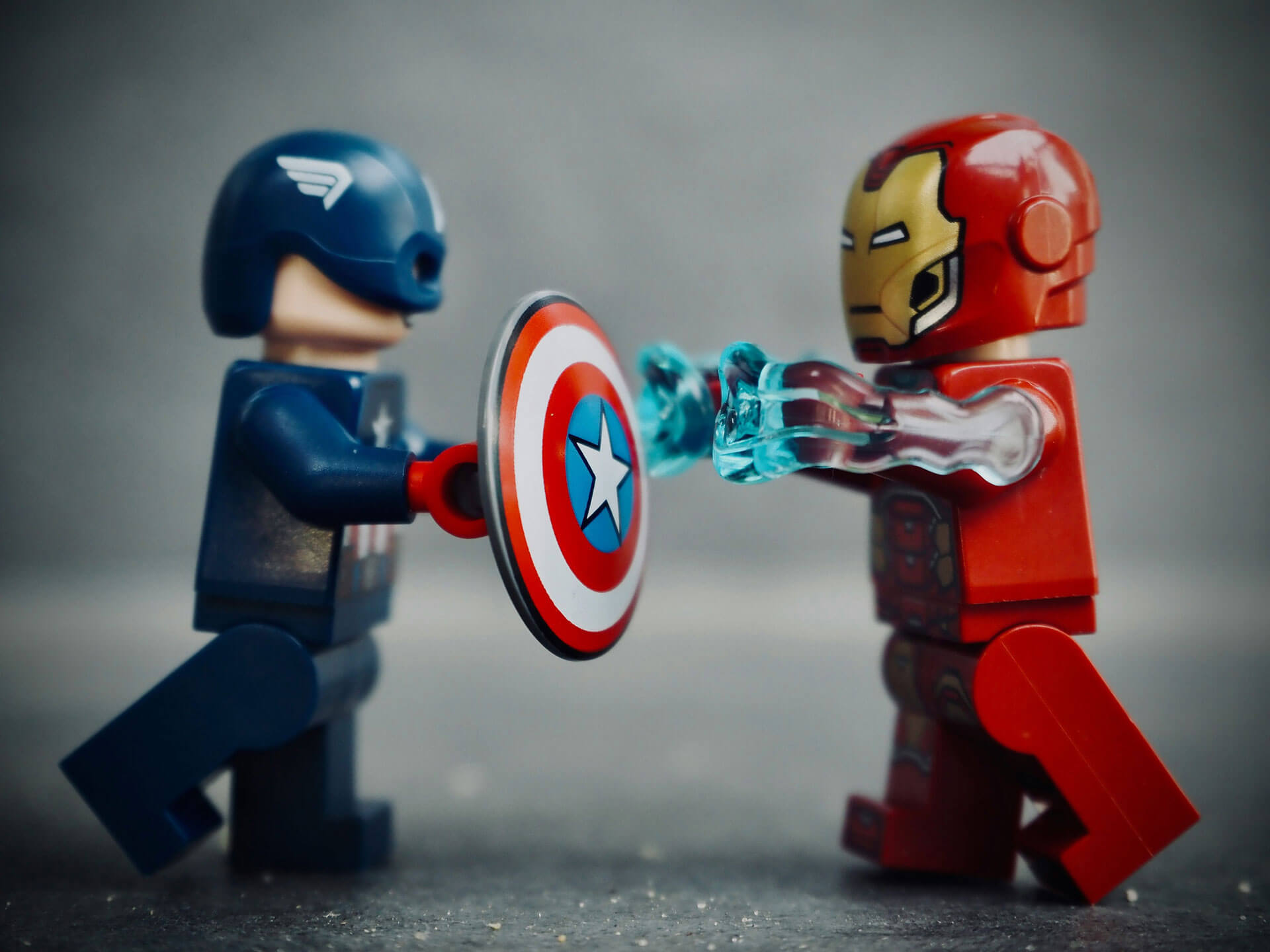

Don’t get me wrong, I’m not saying you shouldn’t innovate. With everything happening in tech right now, we all absolutely need to, if only to stay competitive. But in this case, being first for first’s sake can have massive implications. It’s that old Stan Lee thing about great power and great responsibility.

OpenAI famously spent a year red-teaming GPT4 before releasing it to the public and while I don’t suggest that all organizations need to spend this kind of time (as the models they use will usually already have a certain amount of Reinforcement Learning from Human Feedback (RLHF) or Constitutional AI implemented), they should aim to at least set aside some budget for red teaming and user testing to ensure that what they build actually helps the org rather than harms it.

Not just that, we should really be thinking about these solutions as we would any other brand experience. Something to be researched, designed and tested with craft and consideration.

Now with products such as AgentGPT and rumors of OpenAI soon releasing GPT5 and possibly accompanying agents to go out into the world to do our bidding for us, the risks are about to grow tenfold and most orgs are likely not ready. Imagine what could go wrong if your lead generation agent promised legally binding offers that landed you in court. Or if your marketing agent created and released large scale marketing campaigns that damaged your brand.

So, we need to start asking ourselves some key questions:

1. Starting with, what does our audience actually need solved?

2. How can we start small and test our biggest assumptions first?

3. How can we better design the overall experience and solution?

4. How will this implementation be perceived as a representation of our organization?

5. Have we dedicated enough time and resources to protecting our CX/UX/brand/data/legal etc.?

6. Have we actively tried to break it with prompt injections etc.?

7. What has the user feedback been?

8. How could this go wrong?

9. How can we mitigate this if it does?

Addressing these questions requires a bit of a shift in mindset – one that prioritizes responsible innovation over speed. It means collaboration across teams, from experience design to engineering and data science to legal and customer service. And it necessitates a willingness to slow down, to thoroughly test and refine AI products before releasing them.

As I feel the pull of these challenges in my daily work, I can’t help but think that the old Silicon Valley adage of “move fast and break things” no longer holds. Going forwards, we’ll all be better served by a new, more considered approach: move fast and test things.

Author’s note: if you want to test your prompt enigeneering skills to better understand the challenges with LLM implementations I suggest you try out this game: https://gandalf.lakera.ai On another note, if you want some help designing an AI concept you’ve been thinking about, please reach out.